Qualcomm® AI Hub

Qualcomm AI Hub contains a large collection of pretrained AI models that are optimized to run on the NPU of Dragonwing hardware.

Finding supported models

Models in AI Hub are categorized by supported Qualcomm chipset. To see models that will run on your development kit:

1️⃣ Go to the model list.

2️⃣ Under 'Chipset', select:

- RB3 Gen 2 Vision Kit: 'Qualcomm QCS6490 (Proxy)'

- RUBIK Pi 3: 'Qualcomm QCS6490 (Proxy)'

Deploying a model to NPU (Python)

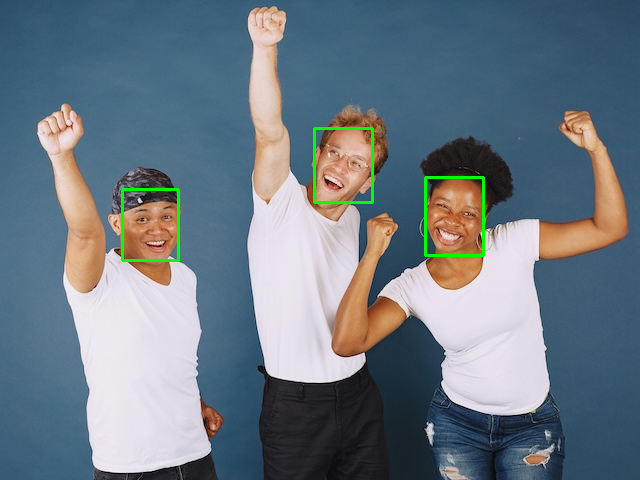

As an example, let's deploy the Lightweight-Face-Detection model.

Running the example repository

All AI Hub models come with an example repository. This is a good starting point, as it shows exactly how to run the model: what the input to your network should look like, and how to interpret the output (here, how to map the output tensors to bounding boxes). The example repositories do NOT run on the NPU or GPU; they run without acceleration. Let's see what our input and output should look like before we move this model to the NPU.

On the AI Hub page for Lightweight-Face-Detection, click "Model repository". This links you to a README file with instructions on how to run the example repository.

To deploy this model, open a terminal on your development board (or an ssh session to it) and:

1️⃣ Create a new venv and install some base packages:

```bash
mkdir -p ~/aihub-demo
cd ~/aihub-demo
python3 -m venv .venv
source .venv/bin/activate
pip3 install numpy setuptools Cython shapely
```

2️⃣ Download an image with a face (640x480 resolution, JPG format) onto your development board, e.g. via:

```bash
wget https://cdn.edgeimpulse.com/qc-ai-docs/example-images/three-people-640-480.jpg
```

Input resolution: AI Hub models require correctly sized inputs. You can find the required resolution under "Technical Details > Input resolution". It is listed as HEIGHT x WIDTH: here 480x640, which is a 640x480 image in width x height. Alternatively, inspect the shape of the input tensor in the TFLite or ONNX file.
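Because the resolution is listed as HEIGHT x WIDTH while Pillow's `resize` expects `(width, height)`, the two are easy to swap. A minimal sketch, assuming the shape comes from LiteRT's `get_input_details()[0]["shape"]` with a leading batch dimension of 1 and 1 or 3 channels; `pil_size_from_input_shape` is a hypothetical helper that uses the same layout check as the example script further down:

```python
def pil_size_from_input_shape(shape):
    """Return (width, height) for PIL's Image.resize from a 4D input shape.

    Assumes batch size 1 and either NCHW ([1, C, H, W]) or
    NHWC ([1, H, W, C]) layout with 1 or 3 channels.
    """
    dims = [int(d) for d in shape]
    if len(dims) != 4 or dims[0] != 1:
        raise ValueError(f"Unexpected input shape: {dims}")
    if dims[1] in (1, 3):    # NCHW: [1, C, H, W]
        height, width = dims[2], dims[3]
    elif dims[3] in (1, 3):  # NHWC: [1, H, W, C]
        height, width = dims[1], dims[2]
    else:
        raise ValueError(f"Cannot infer layout from shape {dims}")
    return width, height


# Lightweight-Face-Detection lists 480x640 (HEIGHT x WIDTH), 1 channel:
print(pil_size_from_input_shape([1, 480, 640, 1]))  # (640, 480)
```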

3️⃣ Follow the instructions under 'Example & Usage' for the Lightweight-Face-Detection model:

```bash
# Install the example (add --no-build-isolation)
pip3 install --no-build-isolation "qai-hub-models[face-det-lite]"

# Run the example
# Use --help to see all options
python3 -m qai_hub_models.models.face_det_lite.demo --quantize w8a8 --image ./three-people-640-480.jpg --output-dir out/
```

You can find the output image in out/FaceDetLitebNet_output.png.

If you're connected over ssh, you can copy the output image back to your host computer via:

```bash
# Find the IP (run on your development board) via:
# ifconfig | grep -Eo 'inet (addr:)?([0-9]*\.){3}[0-9]*' | grep -Eo '([0-9]*\.){3}[0-9]*' | grep -v '127.0.0.1'

# Then (replace 192.168.1.148 with the IP address of your development kit):
scp ubuntu@192.168.1.148:~/aihub-demo/out/FaceDetLitebNet_output.png ~/Downloads/FaceDetLitebNet_output.png
```

4️⃣ Alright! We have a working model. For reference, on the RUBIK Pi 3, running this model takes 189.7ms per inference.

Porting the model to NPU

Now that we have a working reference model, let's run it on the NPU. There are three parts that you need to implement.

1️⃣ You need to preprocess the data, e.g. convert the image into features that you can pass to the neural network.

2️⃣ You need to export the model to ONNX or TFLite, and run the model through LiteRT or ONNX Runtime.

3️⃣ You need to postprocess the output, e.g. convert the output of the neural network to bounding boxes of faces.

Running the model itself is straightforward, as described on the LiteRT and ONNX Runtime pages. The pre- and postprocessing code, however, might not be.

Preprocessing inputs

Most AI Hub image models take a tensor of shape (HEIGHT, WIDTH, CHANNELS) (LiteRT) or (CHANNELS, HEIGHT, WIDTH) (ONNX), plus a leading batch dimension of 1, with values scaled to 0..1. If the model has 1 channel, convert the image to grayscale first. If your model is quantized (most likely), you'll also need to read the zero_point and scale and quantize the pixels accordingly. This is easy in LiteRT, as the model contains the quantization parameters; ONNX does not expose these. For quantized models you typically end up with data scaled linearly to 0..255 (uint8) or -128..127 (int8), so this part is relatively easy. The load_image_litert function in the end-to-end example below demonstrates all of this in Python.
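The quantization step is an affine mapping: a real value x becomes q = round(x / scale + zero_point), clipped to the integer range, and the reverse is x ≈ (q - zero_point) * scale. A minimal NumPy sketch of both directions, mirroring the math in load_image_litert and in the output dequantization of the end-to-end example; the scale and zero-point values in the usage lines are illustrative assumptions, since a real model stores its own:

```python
import numpy as np


def quantize(x, scale, zero_point, dtype=np.uint8):
    """Float -> integer: q = round(x / scale + zero_point), clipped to dtype's range."""
    info = np.iinfo(dtype)
    q = np.rint(np.asarray(x, dtype=np.float32) / scale + zero_point)
    return np.clip(q, info.min, info.max).astype(dtype)


def dequantize(q, scale, zero_point):
    """Integer -> float: x = (q - zero_point) * scale."""
    return (np.asarray(q).astype(np.float32) - zero_point) * scale


# Illustrative parameters: 0..1 pixels stored as uint8 (scale 1/255, zero point 0)
# or as int8 (scale 1/255, zero point -128).
pixels = np.array([0.0, 0.5, 1.0], dtype=np.float32)
q_u8 = quantize(pixels, 1 / 255, 0, np.uint8)
q_i8 = quantize(pixels, 1 / 255, -128, np.int8)
print(q_u8, q_i8)
print(dequantize(q_u8, 1 / 255, 0))
```

Note that the round trip is lossy by up to half a quantization step (scale / 2), which is one reason CPU and NPU results can differ slightly.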

HOWEVER, this is not guaranteed, and this is where the AI Hub example code comes in: every AI Hub example contains the exact code used to prepare the inputs. In our current example, Lightweight-Face-Detection, the input is shaped (480, 640, 1). Yet if you look at the preprocessing code, the image is not converted to grayscale; instead, only the blue channel of the RGB image is taken:

```python
img_array = img_array.astype("float32") / 255.0
img_array = img_array[np.newaxis, ...]
img_tensor = torch.Tensor(img_array)
img_tensor = img_tensor[:, :, :, -1]  # HERE WE TAKE BLUE CHANNEL, NOT CONVERT TO GRAYSCALE
```

These kinds of details are very easy to get wrong. So if your implementation gives different results than the AI Hub example, read the code. This applies even more to non-image inputs (e.g. audio). Use the demo code to understand what the model actually expects.
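To see why this matters, the sketch below compares the two single-channel conversions on three saturated pixels. It assumes Pillow-style luminance, L = 0.299 R + 0.587 G + 0.114 B (the ITU-R 601-2 transform Pillow documents for `convert("L")`; Pillow itself works in integer arithmetic, so its values can differ by rounding). The function names are hypothetical:

```python
import numpy as np


def to_luminance(rgb):
    """Approximate Pillow's convert('L') with ITU-R 601-2 luma weights (float math)."""
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    return rgb.astype(np.float32) @ weights


def blue_channel(rgb):
    """What the face_det_lite preprocessing feeds the model: the last (blue) channel."""
    return rgb[..., -1].astype(np.float32)


# One pure red, one pure green and one pure blue pixel (H=1, W=3, C=3)
rgb = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255]]], dtype=np.uint8)
print(to_luminance(rgb))
print(blue_channel(rgb))
```

A red or green object is clearly visible in the luminance image but black in the blue channel, so feeding the wrong one changes what the model sees.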

Postprocessing outputs

The same applies to postprocessing. For example, there's no standard way of mapping the output of a neural network to bounding boxes (to detect faces). For Lightweight-Face-Detection you can find the code here: face_det_lite/app.py#L77.
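For this model, the decoder in the end-to-end example below turns each heatmap peak at grid cell (cx, cy) into a box by treating the four box values as distances to the left, top, right and bottom edges in grid units, then multiplying by a stride of 8. A worked sketch of just that arithmetic; `decode_box` is a hypothetical helper and the offset values are made up for illustration:

```python
def decode_box(cx, cy, offsets, stride=8):
    """Convert a heatmap cell and its 4 box offsets into pixel [left, top, right, bottom].

    offsets = (x, y, r, b): distances from the cell to the left, top, right and
    bottom edges, in grid cells (same math as the face_det_lite decoder).
    """
    x, y, r, b = offsets
    return [int((cx - x) * stride), int((cy - y) * stride),
            int((cx + r) * stride), int((cy + b) * stride)]


# A peak at grid cell (10, 20) with offsets (2, 3, 4, 5) on a stride-8 grid:
print(decode_box(10, 20, (2, 3, 4, 5)))  # [64, 136, 112, 200]
```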

If you're targeting Python, it's often easiest to copy the postprocessing code into your application, as the AI Hub package pulls in a lot of dependencies that you might not want. In addition, the postprocessing code operates on PyTorch tensors, while your inference runs under LiteRT or ONNX Runtime (which return NumPy arrays), so you'll need to adapt a few small parts. The end-to-end example below shows how.
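As an example of such a change, the PyTorch decoder selects its highest-scoring candidates with a top-k step, which NumPy has no direct function for. One common NumPy replacement, and the one the end-to-end example uses, is `np.argpartition` followed by sorting only the k selected entries. A minimal sketch (`topk` is a hypothetical name; unlike `torch.topk`, the order of equal values is not guaranteed to match):

```python
import numpy as np


def topk(values, k):
    """NumPy stand-in for a top-k on a 1-D array: (values, indices), largest first."""
    values = np.asarray(values)
    k = min(k, values.size)
    if k <= 0:
        return values[:0], np.array([], dtype=np.int64)
    # Partition so the k largest values land in the first k slots (unordered) ...
    idx = np.argpartition(-values, k - 1)[:k]
    # ... then sort only those k, descending by value.
    idx = idx[np.argsort(-values[idx])]
    return values[idx], idx


scores = np.array([0.1, 0.9, 0.3, 0.7], dtype=np.float32)
vals, idx = topk(scores, 2)
print(vals, idx)
```

Partitioning first keeps the cost roughly linear in the number of candidates, which matters when the heatmap has thousands of cells but only a few faces.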

End-to-end example (Python)

With the explanation behind us, let's look at some code.

1️⃣ Open a terminal on your development board, and set up the base requirements for this example:

```bash
# Create a new fresh directory
mkdir -p ~/aihub-npu
cd ~/aihub-npu

# Create a new venv
python3 -m venv .venv
source .venv/bin/activate

# Install the LiteRT runtime (to run models) and Pillow (to parse images)
pip3 install ai-edge-litert==1.3.0 Pillow

# Download an example image
wget https://cdn.edgeimpulse.com/qc-ai-docs/example-images/three-people-640-480.jpg
```

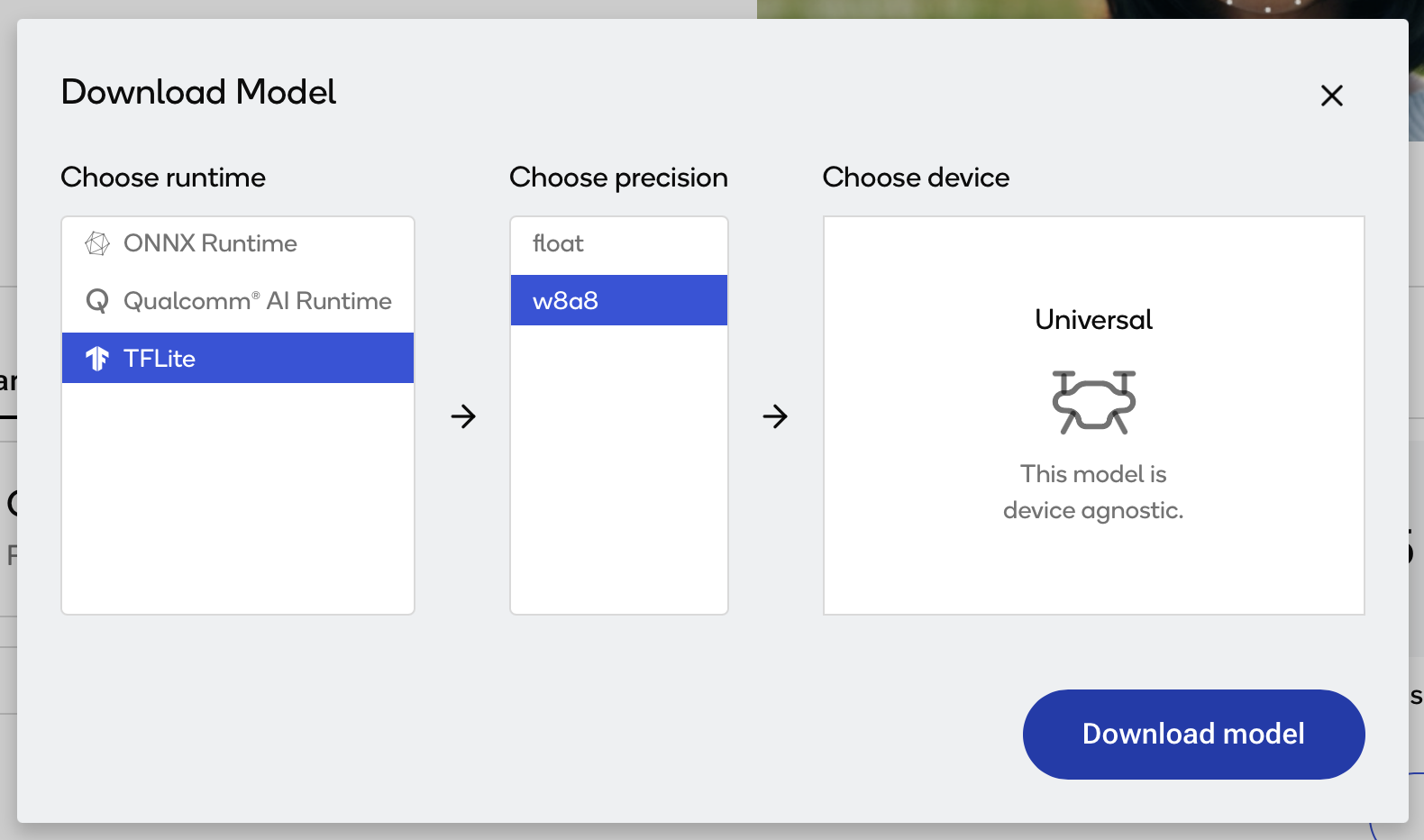

2️⃣ The NPU only supports uint8/int8 quantized models. Fortunately, AI Hub already contains pre-quantized and optimized models. You can either:

- Download the model for this tutorial (mirrored on CDN):

  ```bash
  wget https://cdn.edgeimpulse.com/qc-ai-docs/models/face_det_lite-lightweight-face-detection-w8a8.tflite
  ```

- Or, for any other model, download the model from AI Hub and push it to your development board:

  a. Go to Lightweight-Face-Detection.

  b. Click "Download model".

  c. Select "TFLite" for runtime, and "w8a8" for precision. If your model is only available in ONNX format, see Run models using ONNX Runtime for instructions; the same principles as in this tutorial apply.

  d. Download the model.

  e. If you're not downloading the model directly on your Dragonwing development board, you'll need to push the model over ssh:

  i. Find the IP address of your development board. Run on your development board:

  ```bash
  ifconfig | grep -Eo 'inet (addr:)?([0-9]*\.){3}[0-9]*' | grep -Eo '([0-9]*\.){3}[0-9]*' | grep -v '127.0.0.1'

  # ... Example:
  # 192.168.1.253
  ```

  ii. Push the .tflite file into the ~/aihub-npu directory (replace 192.168.1.253 with the IP address of your development board). Run from your computer:

  ```bash
  scp face_det_lite-lightweight-face-detection-w8a8.tflite ubuntu@192.168.1.253:~/aihub-npu/face_det_lite-lightweight-face-detection-w8a8.tflite
  ```
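Since the NPU only runs uint8/int8 quantized models, it can be worth checking a downloaded model before trying the NPU delegate. A minimal sketch, assuming the list of dicts returned by LiteRT's `get_input_details()` / `get_output_details()`, where each entry carries the `"dtype"` and `"quantization"` (scale, zero_point) keys used in the example script, and assuming per-tensor quantization. `is_quantized` is a hypothetical helper, and the usage line passes a hand-written dict instead of a real interpreter:

```python
import numpy as np


def is_quantized(tensor_details):
    """True if every tensor is int8/uint8 with a non-zero (per-tensor) scale."""
    if not tensor_details:
        return False
    for d in tensor_details:
        scale, _zero_point = d.get("quantization", (0.0, 0))
        if d["dtype"] not in (np.int8, np.uint8) or not scale:
            return False
    return True


# On the board you would pass interpreter.get_input_details(); here a stand-in:
fake_input = [{"dtype": np.uint8, "quantization": (1 / 255, 0)}]
print(is_quantized(fake_input))
```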

3️⃣ Create a new file face_detection.py. This file contains the model invocation, plus the preprocessing and postprocessing code from the AI Hub example (see inline comments).

```python
import sys
import time

import numpy as np
from ai_edge_litert.interpreter import Interpreter, load_delegate
from PIL import Image, ImageDraw


def curr_ms():
    return round(time.time() * 1000)


# Paths
IMAGE_IN = 'three-people-640-480.jpg'
IMAGE_OUT = 'three-people-640-480-overlay.jpg'
MODEL_PATH = 'face_det_lite-lightweight-face-detection-w8a8.tflite'

# If we pass in --use-qnn we offload to NPU
use_qnn = len(sys.argv) >= 2 and sys.argv[1] == '--use-qnn'

experimental_delegates = []
if use_qnn:
    experimental_delegates = [load_delegate("libQnnTFLiteDelegate.so", options={"backend_type": "htp"})]

# Load TFLite model and allocate tensors
interpreter = Interpreter(
    model_path=MODEL_PATH,
    experimental_delegates=experimental_delegates
)
interpreter.allocate_tensors()

# Get input and output tensor details
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

# === BEGIN PREPROCESSING ===

# Load an image (using Pillow) and convert it to the format the interpreter expects (e.g. quantize)
# All AI Hub image models use 0..1 inputs to start.
def load_image_litert(interpreter, path, single_channel_behavior: str = 'grayscale'):
    d = interpreter.get_input_details()[0]
    shape = [int(x) for x in d["shape"]]  # e.g. [1, H, W, C] or [1, C, H, W]
    dtype = d["dtype"]
    scale, zp = d.get("quantization", (0.0, 0))

    if len(shape) != 4 or shape[0] != 1:
        raise ValueError(f"Unexpected input shape: {shape}")

    # Detect layout
    if shape[1] in (1, 3):    # [1, C, H, W]
        layout, C, H, W = "NCHW", shape[1], shape[2], shape[3]
    elif shape[3] in (1, 3):  # [1, H, W, C]
        layout, C, H, W = "NHWC", shape[3], shape[1], shape[2]
    else:
        raise ValueError(f"Cannot infer layout from shape {shape}")

    # Load & resize
    img = Image.open(path).convert("RGB").resize((W, H), Image.BILINEAR)
    arr = np.array(img)

    if C == 1:
        if single_channel_behavior == 'grayscale':
            # Convert to luminance (H, W)
            gray = np.asarray(Image.fromarray(arr).convert('L'))
        elif single_channel_behavior in ('red', 'green', 'blue'):
            ch_idx = {'red': 0, 'green': 1, 'blue': 2}[single_channel_behavior]
            gray = arr[:, :, ch_idx]
        else:
            raise ValueError(f"Invalid single_channel_behavior: {single_channel_behavior}")
        # Keep shape as HWC with C=1
        arr = gray[..., np.newaxis]

    # HWC -> correct layout
    if layout == "NCHW":
        arr = np.transpose(arr, (2, 0, 1))  # (C,H,W)

    # Scale 0..1 (all AI Hub image models use this)
    arr = (arr / 255.0).astype(np.float32)

    # Quantize if needed
    if scale and float(scale) != 0.0:
        q = np.rint(arr / float(scale) + int(zp))
        if dtype == np.uint8:
            arr = np.clip(q, 0, 255).astype(np.uint8)
        else:
            arr = np.clip(q, -128, 127).astype(np.int8)

    return np.expand_dims(arr, 0)  # add batch

# This model looks like grayscale, but AI Hub inference actually takes the BLUE channel
# see https://github.com/quic/ai-hub-models/blob/8cdeb11df6cc835b9b0b0cf9b602c7aa83ebfaf8/qai_hub_models/models/face_det_lite/app.py#L70
input_data = load_image_litert(interpreter, IMAGE_IN, single_channel_behavior='blue')

# === END PREPROCESSING (input_data contains right data) ===

# Set tensor and run inference
interpreter.set_tensor(input_details[0]['index'], input_data)

# Run once to warm up
interpreter.invoke()

# Then run 10x
start = curr_ms()
for i in range(0, 10):
    interpreter.invoke()
end = curr_ms()

# === BEGIN POSTPROCESSING ===

# Grab 3 output tensors and dequantize
q_output_0 = interpreter.get_tensor(output_details[0]['index'])
scale_0, zero_point_0 = output_details[0]['quantization']
hm = ((q_output_0.astype(np.float32) - zero_point_0) * scale_0)[0]

q_output_1 = interpreter.get_tensor(output_details[1]['index'])
scale_1, zero_point_1 = output_details[1]['quantization']
box = ((q_output_1.astype(np.float32) - zero_point_1) * scale_1)[0]

q_output_2 = interpreter.get_tensor(output_details[2]['index'])
scale_2, zero_point_2 = output_details[2]['quantization']
landmark = ((q_output_2.astype(np.float32) - zero_point_2) * scale_2)[0]

# Taken from https://github.com/quic/ai-hub-models/blob/8cdeb11df6cc835b9b0b0cf9b602c7aa83ebfaf8/qai_hub_models/utils/bounding_box_processing.py#L369
def get_iou(boxA: np.ndarray, boxB: np.ndarray) -> float:
    """
    Given two tensors of shape (4,) in xyxy format,
    compute the iou between the two boxes.
    """
    xA = max(boxA[0], boxB[0])
    yA = max(boxA[1], boxB[1])
    xB = min(boxA[2], boxB[2])
    yB = min(boxA[3], boxB[3])

    inter_area = max(0, xB - xA + 1) * max(0, yB - yA + 1)
    boxA_area = (boxA[2] - boxA[0] + 1) * (boxA[3] - boxA[1] + 1)
    boxB_area = (boxB[2] - boxB[0] + 1) * (boxB[3] - boxB[1] + 1)

    return inter_area / float(boxA_area + boxB_area - inter_area)

# Taken from https://github.com/quic/ai-hub-models/blob/8cdeb11df6cc835b9b0b0cf9b602c7aa83ebfaf8/qai_hub_models/models/face_det_lite/utils.py
class BBox:
    # Bounding Box
    def __init__(
        self,
        label: str,
        xyrb: list[int],
        score: float = 0,
        landmark: list | None = None,
        rotate: bool = False,
    ):
        """
        A bounding box plus landmarks structure to hold the hierarchical result.
        parameters:
        label: str, the class label
        xyrb: 4 list for bbox left, top, right, bottom coordinates
        score: the score of the detection
        landmark: list of [x, y] landmark points [[x1,y1], [x2,y2]...]
        """
        self.label = label
        self.score = score
        self.landmark = landmark
        self.x, self.y, self.r, self.b = xyrb
        self.rotate = rotate

        minx = min(self.x, self.r)
        maxx = max(self.x, self.r)
        miny = min(self.y, self.b)
        maxy = max(self.y, self.b)
        self.x, self.y, self.r, self.b = minx, miny, maxx, maxy

    @property
    def width(self) -> int:
        return self.r - self.x + 1

    @property
    def height(self) -> int:
        return self.b - self.y + 1

    @property
    def box(self) -> list[int]:
        return [self.x, self.y, self.r, self.b]

    @box.setter
    def box(self, newvalue: list[int]) -> None:
        self.x, self.y, self.r, self.b = newvalue

    @property
    def haslandmark(self) -> bool:
        return self.landmark is not None

    @property
    def xywh(self) -> list[int]:
        return [self.x, self.y, self.width, self.height]

# Taken from https://github.com/quic/ai-hub-models/blob/8cdeb11df6cc835b9b0b0cf9b602c7aa83ebfaf8/qai_hub_models/models/face_det_lite/utils.py
def nms(objs: list[BBox], iou: float = 0.5) -> list[BBox]:
    """
    nms function customized to work on the BBox objects list.
    parameter:
    objs: the list of the BBox objects.
    return:
    the rest of the BBox after nms operation.
    """
    if objs is None or len(objs) <= 1:
        return objs

    objs = sorted(objs, key=lambda obj: obj.score, reverse=True)
    keep = []
    flags = [0] * len(objs)
    for index, obj in enumerate(objs):
        if flags[index] != 0:
            continue

        keep.append(obj)
        for j in range(index + 1, len(objs)):
            if (
                flags[j] == 0
                and get_iou(np.array(obj.box), np.array(objs[j].box)) > iou
            ):
                flags[j] = 1
    return keep

# Ported from https://github.com/quic/ai-hub-models/blob/8cdeb11df6cc835b9b0b0cf9b602c7aa83ebfaf8/qai_hub_models/models/face_det_lite/utils.py#L110
# The original code uses torch.Tensor, this uses native numpy arrays
def detect(
    hm: np.ndarray,        # (H, W, 1), float32
    box: np.ndarray,       # (H, W, 4), float32
    landmark: np.ndarray,  # (H, W, 10), float32
    threshold: float = 0.2,
    nms_iou: float = 0.2,
    stride: int = 8,
) -> list:
    def _sigmoid(x: np.ndarray) -> np.ndarray:
        # stable-ish sigmoid
        out = np.empty_like(x, dtype=np.float32)
        np.negative(x, out=out)
        np.exp(out, out=out)
        out += 1.0
        np.divide(1.0, out, out=out)
        return out

    def _maxpool3x3_same(x_hw: np.ndarray) -> np.ndarray:
        """
        x_hw: (H, W) single-channel array.
        3x3 max pool, stride=1, padding=1 (same as PyTorch F.max_pool2d(kernel=3,stride=1,padding=1))
        Pure NumPy using stride tricks.
        """
        H, W = x_hw.shape
        # pad with -inf so edges don't borrow smaller values
        pad = 1
        xpad = np.pad(x_hw, ((pad, pad), (pad, pad)), mode='constant', constant_values=-np.inf)
        # build 3x3 sliding windows using as_strided
        s0, s1 = xpad.strides
        shape = (H, W, 3, 3)
        strides = (s0, s1, s0, s1)
        windows = np.lib.stride_tricks.as_strided(xpad, shape=shape, strides=strides, writeable=False)
        # max over the 3x3 window
        return windows.max(axis=(2, 3))

    def _topk_desc(values_flat: np.ndarray, k: int):
        """Return (topk_values_sorted, topk_indices_sorted_desc)."""
        if k <= 0:
            return np.array([], dtype=values_flat.dtype), np.array([], dtype=np.int64)
        k = min(k, values_flat.size)
        # argpartition for top-k by value
        idx_part = np.argpartition(-values_flat, k - 1)[:k]
        # sort those k by value desc
        order = np.argsort(-values_flat[idx_part])
        idx_sorted = idx_part[order]
        return values_flat[idx_sorted], idx_sorted

    # 1) sigmoid heatmap
    hm = _sigmoid(hm.astype(np.float32, copy=False))
    # squeeze channel -> (H, W)
    hm_hw = hm[..., 0]

    # 2) 3x3 max-pool same
    hm_pool = _maxpool3x3_same(hm_hw)

    # 3) local maxima mask (keep equal to pooled)
    # (like (hm == hm_pool).float() * hm in torch)
    keep = (hm_hw >= hm_pool)  # >= to keep plateaus, mirrors torch equality on floats closely enough
    candidate_scores = np.where(keep, hm_hw, 0.0).ravel()

    # 4) topk up to 2000
    num_candidates = int(keep.sum())
    k = min(num_candidates, 2000)
    scores_k, flat_idx_k = _topk_desc(candidate_scores, k)

    H, W = hm_hw.shape
    ys = (flat_idx_k // W).astype(np.int32)
    xs = (flat_idx_k % W).astype(np.int32)

    # 5) gather boxes/landmarks and build outputs
    objs = []
    for cx, cy, score in zip(xs, ys, scores_k):
        if score < threshold:
            # because scores_k is sorted desc, we can break
            break

        # box offsets at (cy, cx): [x, y, r, b]
        x, y, r, b = box[cy, cx].astype(np.float32, copy=False)

        # convert to absolute xyrb in pixels (same math as torch code)
        cxcycxcy = np.array([cx, cy, cx, cy], dtype=np.float32)
        xyrb = (cxcycxcy + np.array([-x, -y, r, b], dtype=np.float32)) * float(stride)
        xyrb = xyrb.astype(np.int32, copy=False).tolist()

        # landmarks: first 5 x, next 5 y
        x5y5 = landmark[cy, cx].astype(np.float32, copy=False)
        x5y5 = x5y5 + np.array([cx] * 5 + [cy] * 5, dtype=np.float32)
        x5y5 *= float(stride)
        box_landmark = list(zip(x5y5[:5].tolist(), x5y5[5:].tolist()))

        objs.append(BBox("0", xyrb=xyrb, score=float(score), landmark=box_landmark))

    if nms_iou != -1:
        return nms(objs, iou=nms_iou)
    return objs

# Detection code from https://github.com/quic/ai-hub-models/blob/8cdeb11df6cc835b9b0b0cf9b602c7aa83ebfaf8/qai_hub_models/models/face_det_lite/app.py#L77
dets = detect(hm, box, landmark, threshold=0.55, nms_iou=-1, stride=8)

res = []
for n in range(0, len(dets)):
    xmin, ymin, w, h = dets[n].xywh
    score = dets[n].score

    L = int(xmin)
    R = int(xmin + w)
    T = int(ymin)
    B = int(ymin + h)
    W = int(w)
    H = int(h)

    if L < 0 or T < 0 or R >= 640 or B >= 480:
        if L < 0:
            L = 0
        if T < 0:
            T = 0
        if R >= 640:
            R = 640 - 1
        if B >= 480:
            B = 480 - 1

    # Enlarge bounding box to cover more face area
    b_Left = L - int(W * 0.05)
    b_Top = T - int(H * 0.05)
    b_Width = int(W * 1.1)
    b_Height = int(H * 1.1)

    if (
        b_Left >= 0
        and b_Top >= 0
        and b_Width - 1 + b_Left < 640
        and b_Height - 1 + b_Top < 480
    ):
        L = b_Left
        T = b_Top
        W = b_Width
        H = b_Height
        R = W - 1 + L
        B = H - 1 + T

    print(f'Found face: x={L}, y={T}, w={W}, h={H}, score={score}')
    res.append([L, T, W, H, score])

# === END POSTPROCESSING ===

# Create new PIL image from the input data, stripping off the batch dim
input_reshaped = input_data.reshape(input_data.shape[1:])
if input_reshaped.shape[2] == 1:
    input_reshaped = np.squeeze(input_reshaped, axis=-1)  # strip off the last dim if grayscale

# And write to output image so we can debug
img_out = Image.fromarray(input_reshaped).convert("RGB")
draw = ImageDraw.Draw(img_out)
for bb in res:
    L, T, W, H, score = bb
    # Use this box's own width W (the original used a stale lowercase w from the detection loop)
    draw.rectangle([L, T, L + W, T + H], outline="#00FF00", width=3)
img_out.save(IMAGE_OUT)

print('')
print(f'Inference took (on average): {(end - start) / 10}ms. per image')
```

4️⃣ Run the model on the CPU:

```bash
python3 face_detection.py

# INFO: Created TensorFlow Lite XNNPACK delegate for CPU.
# Found face: x=120, y=186, w=62, h=79, score=0.8306506276130676
# Found face: x=311, y=125, w=66, h=81, score=0.8148472309112549
# Found face: x=424, y=173, w=64, h=86, score=0.8093323111534119
#
# Inference took (on average): 35.6ms. per image
```

Running the model through LiteRT on the CPU already brings the time per inference down from 189.7ms to 35.6ms.
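The script reports the mean of 10 runs measured with `time.time()`. If you want steadier numbers when comparing CPU and NPU, a small sketch using `time.perf_counter` (a high-resolution clock intended for measuring short durations), a warmup call, and the median instead of the mean could look like this. `benchmark` is a hypothetical helper; on the board you would pass `interpreter.invoke` as `fn`:

```python
import statistics
import time


def benchmark(fn, runs=10, warmup=1):
    """Call fn() `warmup` times untimed, then time `runs` calls; return the median in ms."""
    for _ in range(warmup):
        fn()
    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings_ms)


# Stand-in workload; replace with interpreter.invoke on the board.
print(f"{benchmark(lambda: sum(range(100_000))):.2f} ms")
```

The median is less sensitive than the mean to the occasional slow run caused by other processes on the board.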

5️⃣ Run the model on the NPU:

```bash
python3 face_detection.py --use-qnn

# INFO: TfLiteQnnDelegate delegate: 1382 nodes delegated out of 1633 nodes with 27 partitions.
#
# Found face: x=120, y=186, w=62, h=78, score=0.8255056142807007
# Found face: x=311, y=125, w=66, h=81, score=0.8148472309112549
# Found face: x=421, y=173, w=67, h=86, score=0.8093323111534119
#
# Inference took (on average): 2.4ms. per image
```

🎉 That's it. By quantizing this model and porting it to the NPU, we've sped it up 79 times (!), from 189.7ms to 2.4ms per inference. Hopefully you now have a good idea of the qualities of AI Hub, and of the potential of the NPU and the AI Engine Direct SDK. You're not limited to Python either: the LiteRT page, for example, has C++ examples as well.

Deploying a model to NPU (Edge Impulse)

Image classification, visual regression, and certain object detection models can be deployed through Edge Impulse.